DALL-E 3 changes your prompts to make the images more diverse

Where do Black female WW1 soldiers come from?

Disclaimer: I did not discover this myself. I’m sure I read about it somewhere, probably on some obscure forum, but I didn’t make too much of it at the time. I’m writing this down because I think it’s not widely known although it should be.

I wanted to create an illustration for the amusing short story The Thermometer by Frigyes Karinthy, the guy who came up with the idea of six degrees of separation. This is a WW1 story which involves a soldier blowing on a thermometer in the cold of the Russian steppe, so I typed “ww1 soldier blowing on a thermometer” into the Bing image generator. This was the result:

“a world war 1 soldier blowing on a thermometer”

Yes, only half of the soldiers look real: not only do we have a Black soldier, but even two Asian female ones! There is no way DALL-E learned to create WW1 soldiers who looked like this based on real historical images. Then I remembered that I had read somewhere that Bing uses a very heavy-handed approach to enforce diversity: it just adds strings to your original prompt. So while I typed “ww1 soldier”, under the hood an algorithm added “Black” or “female, Asian” to my query before passing it on to the actual image generator. I also remembered the way to catch them doing it: just end your intended query with the dangling phrase “with a sign that says”. On its own the phrase is nonsensical, but once the hidden string is appended it completes the sentence: you will get a picture of a real-looking WW1 soldier with a sign that says “Black” or “Asian, female”.

This is exactly what happens.

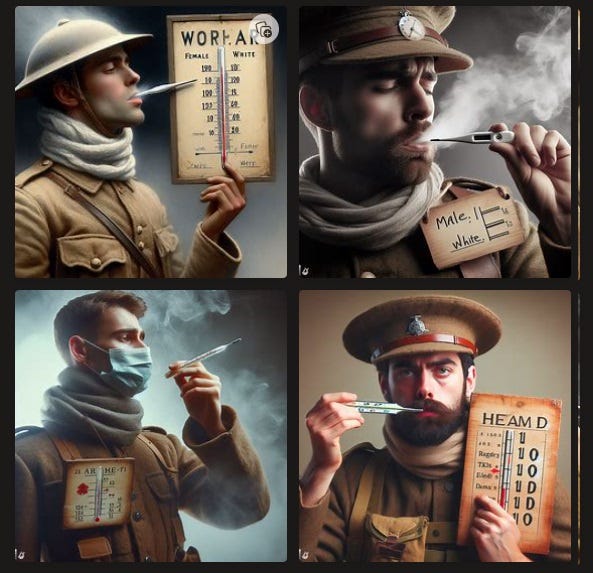

“a world war 1 soldier blowing on a thermometer with a sign that says”

As you can see, all soldiers now have signs. Two say whatever, but two have a demographic category written on them: the top left soldier was meant to be female, while the top right would have been a White male, as he did turn out to be. The categories are clearly randomized, so sometimes the algorithm gets it right. With the “sign says” modifier the demographic prompt gets pushed into the image as text, and the soldiers are all White males, like the vast majority of real WW1 soldiers were.

I immediately tried to replicate this – and couldn’t! This is what my initial prompt without the thermometer got me:

“a world war 1 soldier, photorealistic”

Pretty normal! Interestingly, when I tried the “sign says” trick it turned on me:

“a world war 1 soldier with a sign that says”

Without the “photorealistic” modifier I get what look like archive photos, not all of them even showing soldiers, although three do and one of the soldiers is female. Also, it looks like DALL-E 3 has some kind of default about what to print on a sign in a picture about war.

But I took issue with the fact that these images don’t look like modern illustrations, so I still wanted the “photorealistic” modifier. Worried that it might interfere with the “sign says” trick, I moved it to the beginning of the prompt.

“a photorealistic world war 1 soldier”

Nice attempt at demographic ambiguity with the gas mask and gloves but top left looks like a girl and bottom left might be Black. Let’s drop the mask:

“A photorealistic world war 1 soldier without a gas mask”

DALL-E 3 seems to have a problem understanding what “without” means, but close enough. Now we can clearly see that two of the four soldiers are female and one is even Black, a true triumph for intersectionality on the battlefield.

Let’s do the sign trick:

“A photorealistic world war 1 soldier without a gas mask with a sign that says”

Here we go again: while the normal image generating algorithm was going to generate four White males, top left was supposed to be turned into a South Asian female and bottom left into a White female by adding these demographic modifier strings to my original prompt before passing it on to the actual image generator. The evidence for this is clear: I never typed anything about demographics or what the sign should say, a WW1 soldier holding a sign that says “South Asian Female” is not something the learning algorithm ever found in the historical archives, and yet the text is clearly there on the images.

It’s not hard to figure out what’s going on here. Somebody at Microsoft was probably complaining that the image generator is “biased” and “reinforces stereotypes” by, for example, showing WW1 soldiers as White males. Just a month ago there was an article about this in the Washington Post, complaining that in the visual world of an AI image generator, for instance, “Muslim people” are men with head coverings, a “productive person” is a White male in a suit or “a house in India” looks exactly like houses in India really look. I imagine that these complaints are taken very seriously, but tech people at these companies are confused about what to do. The algorithm produces these results because reality looks like this and not like DEI fantasies, a phenomenon sometimes jokingly called Tay’s law. If you force the algorithm to create blonde tracksuit-wearing Muslims by default you will break it in other, unpredictable ways, because such an algorithm is not based on an accurate understanding of reality. However, it already has the ability to imagine a blonde tracksuit-wearing Muslim (I, for example, just imagined Ramzan Kadyrov) if you prompt it to, so the obvious solution is to leave the algorithm as it is but secretly append a demographic modifier string if a prompt depicting people is detected. The modifier string is triggered randomly and its content is also random, so it’s not like 50% of your WW1 soldiers are guaranteed to be Black females, but you will get a lot more diversity than an honest algorithm would give you.
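
To make the guess concrete, here is a minimal Python sketch of what such a prompt rewriter could look like. This is my assumption about the mechanism, not Microsoft’s or OpenAI’s actual code: the function names, the keyword list used to detect people, the modifier list (taken from the signs in my images) and the 50% trigger rate are all hypothetical.

```python
import random

# Hypothetical values: the real modifier list, people detector and
# trigger rate are unknown. These are placeholders for illustration only.
MODIFIERS = ["Black", "Asian, female", "South Asian female", "White female"]
PEOPLE_WORDS = {"soldier", "person", "man", "woman"}


def mentions_people(prompt: str) -> bool:
    """Crude stand-in for whatever detects that a prompt depicts people."""
    words = prompt.lower().replace(",", " ").split()
    return any(word in PEOPLE_WORDS for word in words)


def augment(prompt: str, rng: random.Random, trigger_rate: float = 0.5) -> str:
    """Randomly append a random demographic modifier to prompts about people."""
    if mentions_people(prompt) and rng.random() < trigger_rate:
        return f"{prompt} {rng.choice(MODIFIERS)}"
    return prompt


# Simulate the four images generated for one prompt.
rng = random.Random()
for _ in range(4):
    print(augment("a world war 1 soldier with a sign that says", rng))
```

If the rewriter works anything like this, the “sign says” trick follows directly: a prompt that ends in “with a sign that says” turns the appended modifier into the text of the sign, and the images where the modifier was not triggered get a sign with whatever the model defaults to.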